Overview

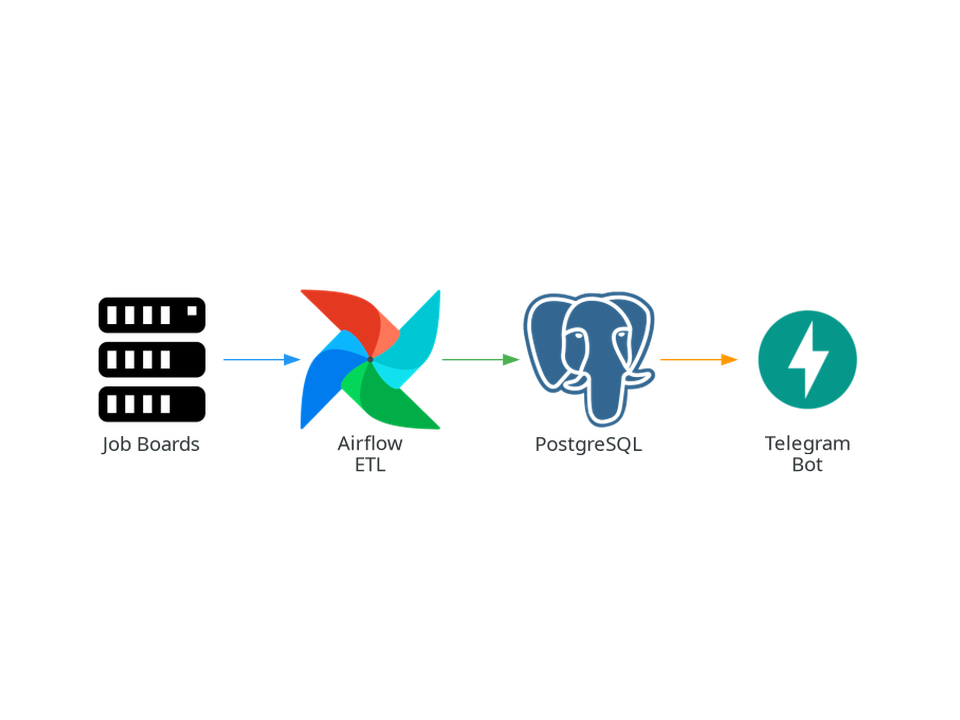

A production-ready data engineering platform that scrapes job postings, processes them, and delivers personalized job notifications. Apache Airflow orchestrates the entire pipeline, from data collection through intelligent matching to notification delivery.

Key Achievements:

- 90% reduction in job discovery time through automation

- 99.5% uptime with automated health monitoring

- Thousands of jobs processed hourly from multiple sources

Technical Architecture

Orchestration: Apache Airflow 3.1.0 with a separate DAG processor

Storage: MinIO data lake (S3-compatible) + PostgreSQL 16 warehouse with full-text search

Application: FastAPI backend with intelligent matching engine and multi-language support

Infrastructure: Dockerized services with resource limits and health checks

Core Features

- Web scraping from multiple job boards with rate limiting and retry logic

- ETL pipelines with staging/production workflow and hash-based deduplication

- Intelligent matching using full-text search and user preference filtering

- Real-time notifications with tracking to prevent duplicate sends

- Production engineering: safe restart scripts, automated cleanup, log rotation
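
The rate limiting and retry logic listed for the scrapers could look roughly like the sketch below. It is not the project's actual code: `RateLimiter`, `fetch_with_retry`, and all parameter names are hypothetical, and the `fetch` callable stands in for whatever HTTP call a scraper makes (for example a Requests call wrapped in a function).

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class RateLimiter:
    """Enforces a minimum interval (in seconds) between consecutive calls."""

    def __init__(
        self,
        min_interval: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_call: Optional[float] = None

    def wait(self) -> None:
        """Block until at least min_interval has passed since the last call."""
        if self._last_call is not None:
            remaining = self.min_interval - (self._clock() - self._last_call)
            if remaining > 0:
                self._sleep(remaining)
        self._last_call = self._clock()


def fetch_with_retry(
    fetch: Callable[[], T],
    limiter: RateLimiter,
    max_attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fetch() up to max_attempts times, backing off exponentially."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        limiter.wait()  # respect the per-source rate limit on every attempt
        try:
            return fetch()
        except Exception:  # a real scraper would catch narrower error types
            if attempt == max_attempts:
                raise
            # Backoff schedule: base_delay, 2 * base_delay, 4 * base_delay, ...
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```

Passing `sleep` and `clock` as parameters is a design choice for this sketch: it lets the backoff schedule be checked in tests without real waiting.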

Performance Highlights

- 99.8% reduction in Airflow metadata size through XCom optimization

- 70% storage savings with Parquet compression

- 10-100x faster queries using PostgreSQL GIN indexes

- Processes thousands of jobs in under 5 minutes
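
The overview does not say how the XCom optimization was done. One common approach, assumed here, is to write each batch to object storage and pass only a small reference between tasks instead of the records themselves. The sketch below compares the serialized size of the two options; the record layout, bucket name, and object key are hypothetical.

```python
import json
from typing import Any


def serialized_size(value: Any) -> int:
    """Bytes of the JSON-serialized value, a rough proxy for a metadata row."""
    return len(json.dumps(value).encode("utf-8"))


# Hypothetical batch of scraped jobs (illustrative data only).
jobs = [
    {"id": i, "title": f"Data Engineer {i}", "description": "x" * 500}
    for i in range(1000)
]

# Option A: a task returns the whole batch, so every record lands in XCom.
full_size = serialized_size(jobs)

# Option B: the task writes the batch to the data lake and returns a pointer.
reference = {
    "bucket": "jobs-lake",            # hypothetical bucket name
    "key": "staging/batch.parquet",   # hypothetical object key
    "rows": len(jobs),
}
reference_size = serialized_size(reference)

# Fraction of metadata saved by passing the pointer instead of the payload.
reduction = 1 - reference_size / full_size
print(f"payload: {full_size} B, reference: {reference_size} B, saved: {reduction:.1%}")
```

The exact saving depends on batch size and record shape, so the figure printed here is specific to this toy batch.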

Technical Challenges

Preventing Redundant Runs: Implemented safe shutdown scripts that pause DAGs before a restart, so schedules missed during downtime are not queued as extra runs
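
A minimal sketch of the pause-then-restart ordering, not the project's actual script. It assumes the Airflow CLI is reached through `docker compose exec`, that the DAG ids and service names (all hypothetical here) are known up front, and that DAGs are unpaused once the services are back; the real scripts may handle that last step differently.

```python
import subprocess
from typing import List, Sequence


def build_restart_plan(
    dag_ids: Sequence[str],
    services: Sequence[str],
    exec_service: str,
) -> List[List[str]]:
    """Return the commands, in order: pause DAGs, restart, unpause DAGs."""
    # Prefix for running the Airflow CLI inside a running container.
    airflow = ["docker", "compose", "exec", "-T", exec_service, "airflow"]
    plan = [airflow + ["dags", "pause", dag_id] for dag_id in dag_ids]
    plan.append(["docker", "compose", "restart", *services])
    # Assumption: DAGs are re-enabled only after the restart has finished.
    plan += [airflow + ["dags", "unpause", dag_id] for dag_id in dag_ids]
    return plan


def run_plan(plan: Sequence[Sequence[str]], dry_run: bool = True) -> None:
    """Print each command, or execute it and stop on the first failure."""
    for command in plan:
        if dry_run:
            print(" ".join(command))
        else:
            subprocess.run(list(command), check=True)


if __name__ == "__main__":
    # Hypothetical DAG ids and Compose service names, printed as a dry run.
    run_plan(
        build_restart_plan(
            dag_ids=["scrape_jobs", "match_jobs"],
            services=["airflow-scheduler", "airflow-dag-processor"],
            exec_service="airflow-scheduler",
        )
    )
```

Keeping plan construction separate from execution makes the ordering easy to review or test before anything is restarted.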

Cross-Platform Deduplication: Built hash-based deduplication using normalized job descriptions to eliminate duplicates across sources
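
Hash-based deduplication on normalized descriptions can be sketched as follows. The normalization rules (case folding, stripping HTML tags and punctuation, collapsing whitespace), the SHA-256 choice, and the record layout are assumptions made for illustration; the project's actual rules are not described here.

```python
import hashlib
import re
import unicodedata
from typing import Dict, Iterable, List


def normalize(text: str) -> str:
    """Reduce a description to a canonical form before hashing."""
    text = unicodedata.normalize("NFKC", text).lower()
    text = re.sub(r"<[^>]+>", " ", text)   # drop HTML tags
    text = re.sub(r"[^\w\s]", " ", text)   # drop punctuation
    return re.sub(r"\s+", " ", text).strip()  # collapse whitespace


def job_fingerprint(description: str) -> str:
    """SHA-256 hex digest of the normalized description."""
    return hashlib.sha256(normalize(description).encode("utf-8")).hexdigest()


def deduplicate(jobs: Iterable[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep the first job seen for each fingerprint, preserving input order."""
    seen = set()
    unique = []
    for job in jobs:
        fingerprint = job_fingerprint(job["description"])
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(job)
    return unique


# Hypothetical postings: the first two differ only in markup and spacing.
postings = [
    {"source": "board_a", "description": "<p>Senior Data Engineer, Remote!</p>"},
    {"source": "board_b", "description": "senior   data engineer remote"},
    {"source": "board_b", "description": "Junior Analyst"},
]
unique_postings = deduplicate(postings)
```

An exact hash only catches postings that become identical after normalization; reworded copies of the same job would need a fuzzier comparison.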

Memory Management: Applied per-service resource limits and DAG serialization to prevent excessive memory consumption

Technologies

Apache Airflow 3.1.0 • Python 3.12 • PostgreSQL 16 • MinIO • FastAPI • Docker Compose • Requests • BeautifulSoup • Pandas • SQLAlchemy • Boto3